Identity 2.0 on Digital Dehumanisation

and creative outputs with digital tools

An article by Arda Awais and Savena Surana (Identity 2.0)

Where do you start when creating a more equitable digital future? Maybe by ending misinformation online? Or political targeting? By creating digital platforms that don’t solely exist to vacuum up our data? Or by adding a disclaimer so AI-run systems aren’t seen as absolute truth?

To do any of these things we need to have a collective imagination – to dream beyond the boundaries of our current digital landscape. In the shared effort to build a fairer digital society, we must include those from creative disciplines. It is not just coders and inventors who build the future; it’s also the artists, the hackers and the UX designers.

To tackle digital dehumanisation, we must think creatively. We have the opportunity to build something different. The outcomes do not have to be within the guidelines of what technology can do today, because we can build machines in a new way.

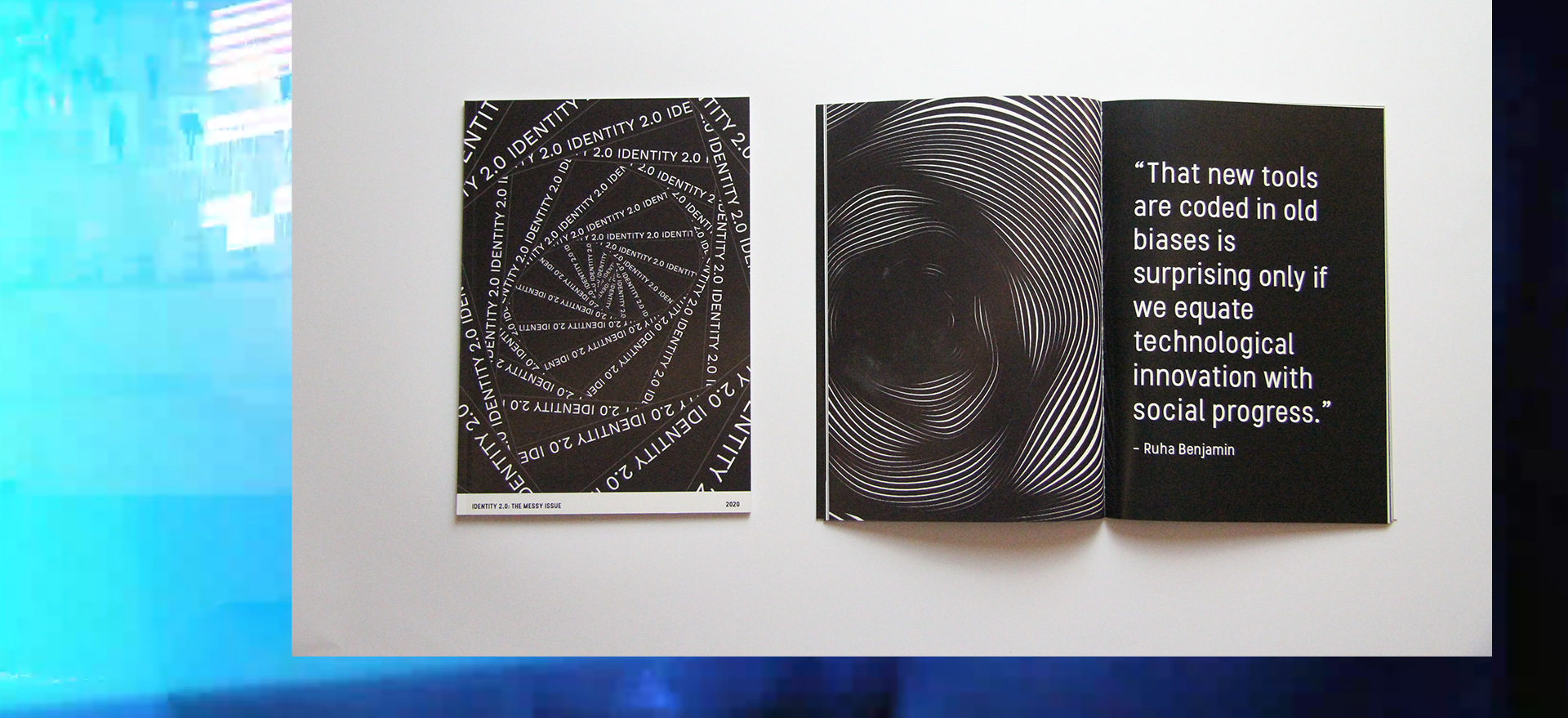

At Identity 2.0, creativity is at our centre. We have worked independently and with clients to produce work which explores our relationship with technology.

Our work has explored the decolonising data movement, creating joyful digital spaces and listening to the views of young people about AI. Sometimes, we use technology to do this, but it is not the first tool we run to. We first research to learn about the work that’s already been developed and think about the emotive journey we want our audiences to go on. Engaging experiences can be developed without technology, and not every creative route using it has been a success. We have learnt a lot along the way, especially as new research is shared and the community of tech resistance grows. We reflect on our practice and the tools we use with each new project.

Technology is exciting for precisely the reason it can be so dangerous – it has immense power to change society. The harms it can enact are therefore widespread and far-reaching.

This breadth also allows people from all walks of life to engage in resisting digital dehumanisation. For example, we’ve seen platforms for people who are campaigning for workers’ rights, for environmentalists and for those fighting the surveillance state. That’s why Stop Killer Robots’ decision to adopt the concept of Digital Dehumanisation as a core tenet of their fight against automated harm makes sense. Digital dehumanisation produces a spectrum of harm, and autonomous weapons, aka killer robots, are just at the extreme end of the line. By situating itself in this wider conversation, the campaign shows the type of intersectional support that is needed to galvanise a movement like this.

This works both ways – not only can other forms of resistance lead people to support the campaign, but the Stop Killer Robots campaign can also open people’s eyes to why other forms of digital harm must be stopped too. Talking about killer robots generates an innate human response in your gut – it’s unsettling. This feeling is a useful gateway to discussing the wider digital harms. Trying to explore why facial recognition technology is harmful to a productive society might not provoke the same emotive response on its own, but it can be just as harmful.

It’s worth saying that not all digital tools lead to digital harm. There are some really exciting, innovative projects, such as Mozilla’s AI investment, indigenous scientists changing AI or even AR projects being used to build empathy.

And just as technology can help create platforms, it can also provide a knowledge hub for people to learn. In 2021, we developed a timeline which explored the hypervisibility and invisibility of Black bodies throughout history in terms of surveillance – this was a part of an exhibition experience. We decided to publish this work online the following year, which led to it becoming a resource in academic courses and a starting point for further exploration of the topics. Digital spaces do have the power to reach greater audiences and can help amplify your work.

But when using any digital tools it’s important to weigh up the ethical considerations, from the environmental impact of using AI to the unfair labour conditions under which the tech is produced. These aren’t easy things to grapple with, and we all know there is no ethical consumption under capitalism. But who said we can’t try?

It’s all about being able to balance the ethical concerns and have a human rights approach to digital tools – or rather think about digital rights when using technology. What are the human costs vs the desired outcomes? We need to see this slower and more considered route as an integral part of using technology, rather than a barrier to progress.

At Identity 2.0 we are not anti-technology; we’re just pro-people. We are pro considering what it means to produce joy and reduce harm when it comes to digital tools. We know it’s not as catchy, but we have to start somewhere.