No Dehumanising Tech

Laura Nolan is a computer programmer who resigned from Google over Project Maven. She is now a member of the International Committee for Robot Arms Control (ICRAC), a founding member of the Campaign to Stop Killer Robots. #TeamHuman.

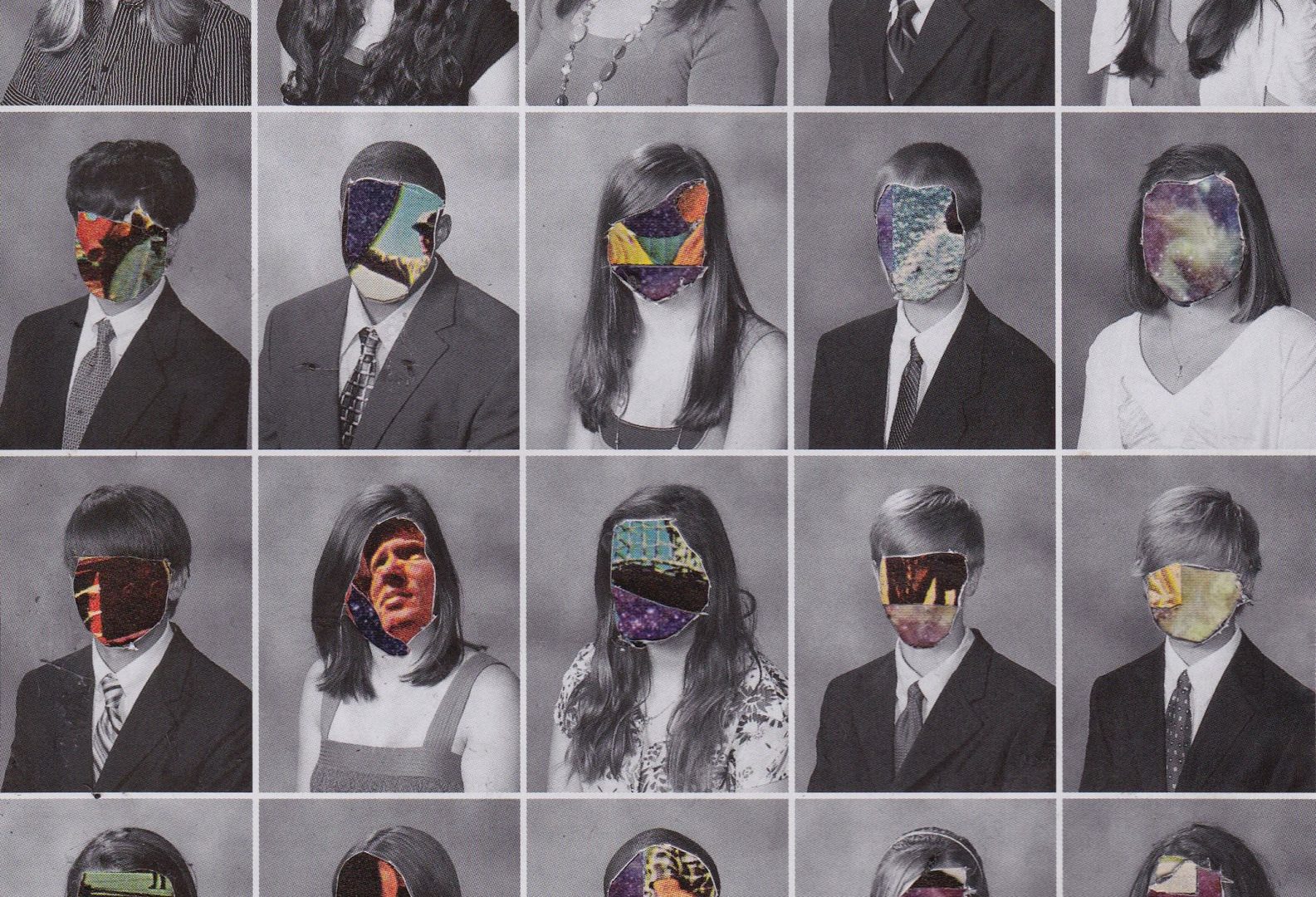

Photo by John Sloan Photography (CC BY-NC-SA)

Dehumanisation is the failure to acknowledge the humanity of others. It is a denial of human dignity — the intrinsic value of human persons — which is one of the cornerstones of human rights and of modern ethical thought. Conversely, dehumanisation can be the first step towards war, genocide, and other atrocities.

How, in the 21st century, do we build and operate computerised social welfare systems that starve benefit recipients to death or deny basic entitlements to those less able to navigate a complex software-mediated application process?

We may not be treated as fully human by the organisations we interact with when we are reduced to a record in a datastore. Computer systems now operate most bureaucratic processes, such as welfare systems, and they distance staff from the effects of the system. As the renowned sociologist and philosopher Zygmunt Bauman writes, ‘practical and mental distance from the final product means […] most functionaries of the bureaucratic hierarchy may give commands without full knowledge of their effects. In many cases, they would find it difficult to visualize those effects.’

Those of us who build the decision-making systems are usually even further removed from the effects of those systems than senior managers are. Intentionally or not, we often build rigid systems that lead to worse outcomes for many individuals than older bureaucracies staffed by human beings with the power to make decisions and to accommodate exceptions.

Welfare systems are only one example of this kind of phenomenon. The HR systems at Ibrahim Diallo’s employer repeatedly marked him as no longer employed. He was effectively fired by the computers.

The COVID-19 pandemic has seen an increase in the number of software-mediated interactions in most of our lives. Employees have railed against the dehumanising effects of automated surveillance by employers. Students taking exams have spoken about the impact of proctoring software which draws attention to their disabilities, doesn’t work with their skin colour, and is invasive.

Being scrutinised and evaluated by software, and having decisions made about us by it, often feels unfair and dehumanising. We know that individuals can be subject to unfair outcomes as a result of automated decision making or profiling; this is the reason for the existence of Article 22 of the European Union’s General Data Protection Regulation:

‘The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her.’

Citizens of wealthy Western nations often have some legal protections against the most damaging manifestations of digital dehumanisation. This is less true for other people. The story of the United States Immigration and Customs Enforcement (ICE) Risk Classification Assessment tool is a cautionary tale. In 2017, this tool was modified to always recommend detention of any undocumented immigrant, in a departure from years of practice. Officers could override the tool’s recommendation, but few did, and detentions skyrocketed.

Photo by ev.

Bauman points out that ‘[u]se of violence is most efficient and cost-effective when the means are subjected to solely instrumental-rational criteria, and thus dissociated from moral evaluation of the ends.’ Nothing seems more rational than a recommendation made by a computer, however flawed that decision may be in context.

In an organisation where decisions are mediated by software, responsibility for outcomes is thoroughly diffused. Those of us who build the decision-making systems are judged as specialised workers: does our code work? Is it efficient and fast? Did we add the new features that were requested?

Management of such an organisation are far from the ‘sharp end’, where benefits are denied, where immigrants are detained, where test-takers are accused of being cheats because they cannot stare at a screen unblinking for hours. Rank-and-file staff members are expected to work within the system, and their easiest course of action is to follow the recommendations of the software that they work with.

As Bauman concludes: ‘the result is the irrelevance of moral standards for the technical success of the bureaucracy [emphasis Bauman’s].’ A bureaucracy — as all large modern organisations are, from organisations that administer social welfare to a military — cares only for efficiency and technical success. Computerised decision making tends to reduce human judgment even further in importance. ‘Morality boils down to the commandment to be a good, efficient and diligent expert and worker,’ per Bauman.

Dehumanisation is the antithesis of human dignity and respect for human rights. The only logical end to the rise of digital dehumanisation is to automate the decision to end human lives — to build and use autonomous weapons.

As computerised decision-making systems become more prevalent in our education, work, welfare, and justice systems, the upholding of human dignity and human rights must be a priority. Software engineers are not judged on our contribution to upholding human rights in our end-of-year performance evaluations. Nevertheless, we have an obligation to understand and to mitigate the negative impacts of the systems we build, and to refrain from building systems that harm and dehumanise.

In 2019, large parts of the software industry said ‘No tech for ICE.’ That’s a great message and it bears repeating, but we need to go bigger: ‘No dehumanising tech.’

Photo by Maxim Hopman

To find out more about killer robots and what you can do, visit: www.stopkillerrobots.org. If this article resonates with you as a technologist, check out the Campaign to Stop Killer Robots resources for technology workers: www.stopkillerrobots.org/tech.

- Zygmunt Bauman, Modernity and the Holocaust (Polity Press, 1989), 115.

- Zygmunt Bauman, Modernity and the Holocaust, 114.

- Zygmunt Bauman, Modernity and the Holocaust, 117.

- Zygmunt Bauman, Modernity and the Holocaust, 115.

Original Article on Medium.com.