Digital Dehumanisation — When machines decide, not people

Isabelle Jones is Campaign Outreach Manager for the Campaign to Stop Killer Robots. She leads efforts to expand and strengthen the campaign's network, and provides support for campaigners through funding, resources, and coordination to build momentum and energize the movement.

We didn’t expect a Campaign to Stop Killer Robots to be needed in the world — but it is. Globally, we are seeing a growing influence of computer processing and algorithmic thinking: taking “There’s an app for that!” to a whole new level. From ‘smart houses’ and the Internet of Things to facial recognition and predictive policing — we are increasingly driven towards so-called technological ‘solutions’. Technologies heralded as prioritizing precision, efficiency, and speed are presented as silver bullets. But at what cost? This techno-solutionism is promoted without consideration of the data biases inherent to the design of the technologies, or the consequences of removing human understanding of context and nuance from the structures we live in and the challenges we face.

When machines decide, not people

At the far end of a spectrum of increasing automation are weapon systems that use computer programming and sensors to identify and select targets over wider areas and longer periods of time. This means less direct human engagement with, and understanding of, what is happening in an attack. It means getting closer to machines making decisions over whom to kill.

Weapon systems like these present a number of ethical, moral, legal, technical, and security concerns.

We need to draw a line. Our humanity, our complex identities, should not be reduced to physical features or patterns of behaviour, to be analysed and pattern-matched by systems unable to understand concepts of life, human rights or liberty. People wouldn’t be seen by killer robots — they would be processed. This digital dehumanisation would deprive people of dignity, demean individuals’ humanity, and remove or replace human involvement or responsibility through autonomous decision making in technology.

We want to build a future that rejects systems that reduce living people to data points, to be automatically profiled, processed and subjected to force.

Photo by arvin keynes.

What we’re doing to counter digital dehumanisation in 2021

Government representatives have been grappling with what this means for international humanitarian law (the ‘laws of war’) since 2013, but last year we saw diplomatic progress grind to a halt in the midst of the COVID-19 pandemic and a lack of political will to push forward. Discussions have yet to resume. Despite setbacks, we know that 2021 and 2022 have the potential to be transformative. They will be years of transition, of evolution, and of opportunity.

We are encouraging new leadership and representation from within the campaign’s staff team and our wider membership. We are working hard to reflect the diversity of people, organizations, skills, expertise and perspectives that our global coalition brings together. We are going to make the most of 2021’s potential by increasing political pressure, building policy coherence, broadening our community, engaging dynamic stakeholders and partners, and creating a strong foundation for the negotiation of the treaty we believe in: a treaty that rejects the automation of killing and instead holds onto the fundamentals of humanity, valuing each other not through calculation but through understanding, and promoting the principle of human control over the use of force and emerging technologies.

To avert the realisation of autonomous killing, states need to prohibit all systems where sensors are used to target people. This is one of three pillars that the Campaign to Stop Killer Robots believes are urgently needed in an international treaty to retain meaningful human control over the use of force. And as we settle into 2021, it is needed more than ever before.

So let’s make the most of the opportunity we have to prevent the ultimate manifestation of digital dehumanisation. Let’s take advantage of this opportunity to prevent the loss of life and limb we have seen from the use of other indiscriminate weapons. Let’s work together to ensure we don’t allow creeping automation to replace human decision making where it should be most present.

There is growing recognition by the international community that weapons systems lacking meaningful human control cross a critical threshold and must be prohibited, and public opposition to fully autonomous weapons remains strong. So we are not alone. Our campaigners are working to build support for a treaty by engaging with concerned states, supporting efforts to convene “digital diplomacy” meetings, and prioritizing political outreach and incredibly innovative national campaigning amidst the realities of a pandemic. And we want you to join them.

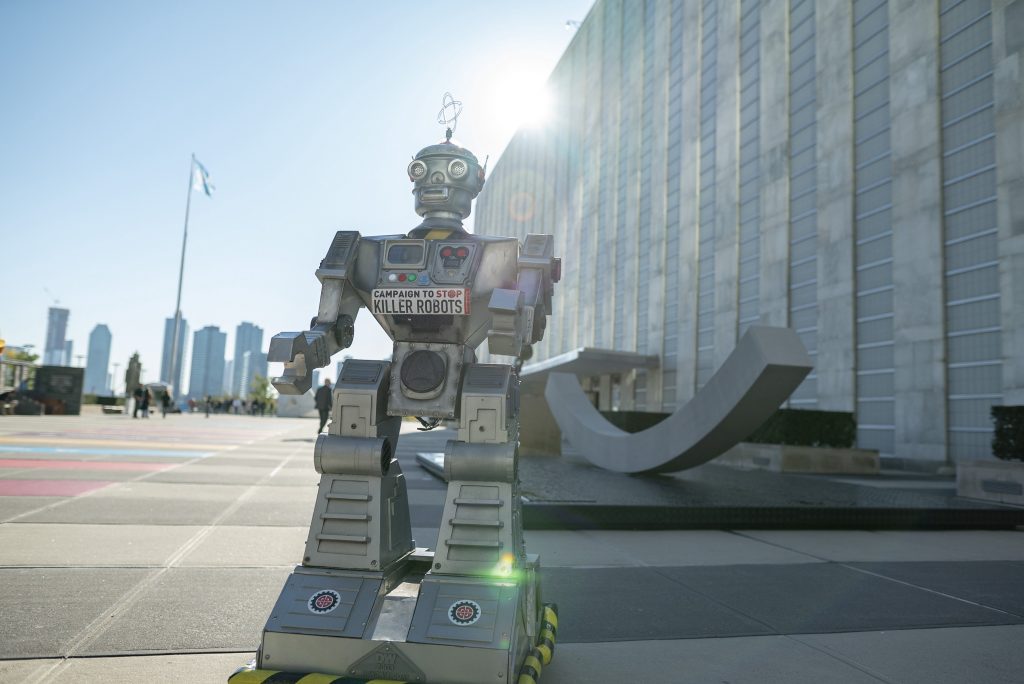

The Campaign to Stop Killer Robots robot outside the UN building in New York. Photo by Ari Beser.

Original Article on Medium.com.