Rise of the tech workers

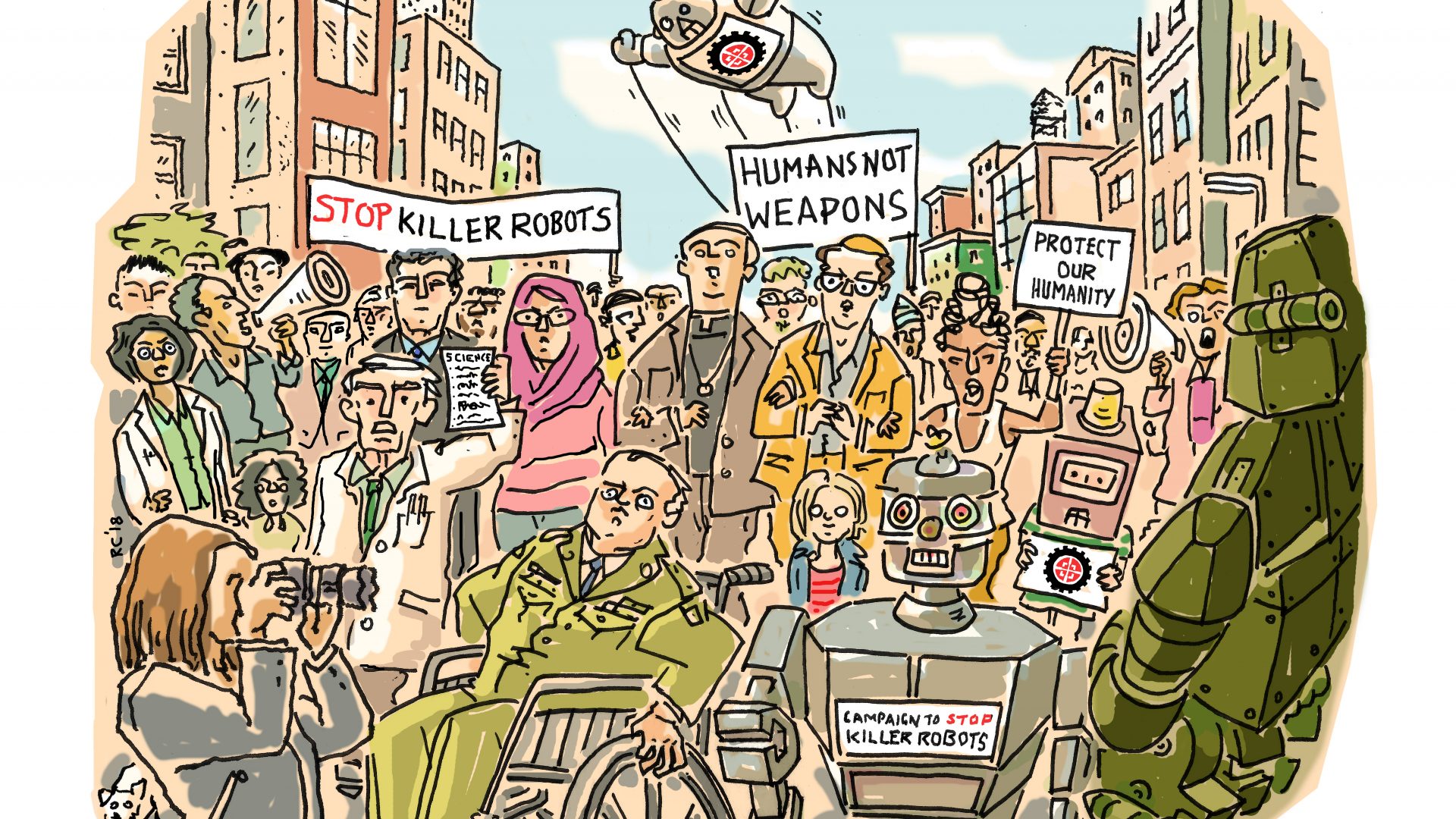

A group of more than 4,000 anonymous Google employees has fittingly been named the 2018 “Arms Control Person of the Year” for helping to end a company military contract that raised ethical and other concerns over the appropriate use of artificial intelligence and machine learning. The Campaign to Stop Killer Robots applauds the employees’ actions, which contributed directly to Google’s subsequent commitment not to “design or deploy” artificial intelligence for use in weapons.

Other companies should follow Google’s example, stay out of the business of fully autonomous weapons, and support the negotiation of a ban treaty.

Tech workers from Google became allies of the Campaign to Stop Killer Robots over the course of 2018, especially after the April publication of their letter demanding that the company commit never to build “warfare technology.”

Tech workers will contribute to the Campaign’s activities in 2019, including a global campaign meeting in Berlin on 21-23 March to build support for a treaty that would retain meaningful human control over the use of force and thereby prohibit weapons systems that lack such control.

Details of Google’s involvement in Project Maven, a US Department of Defense-funded program that sought to autonomously process video footage shot by surveillance drones, first came to light in early 2018. Kate Conger, then at Gizmodo and now with The New York Times, reported on the issue as it unfolded, as did other tech journalists.

In March, the Campaign coordinator wrote to the heads of Google and Alphabet to recommend that the companies adopt “a proactive public policy” committing never to engage in work aimed at the development and acquisition of fully autonomous weapons systems. The Campaign urged the companies to publicly support the call to preemptively ban such weapons.

The Campaign also questioned Google’s involvement in Project Maven. It warned that the program’s AI-driven identification of objects could quickly blur into, or shift toward, AI-driven identification of “targets” as a basis for the use of lethal force. This could give machines the capacity to determine what is a target, which would be an unacceptably broad use of the technology.

In May, more than 800 scholars, academics, and researchers who study, teach about, and develop information technology released a statement in solidarity with the Google employees. They called on the company to support an international treaty to prohibit autonomous weapon systems and commit not to use the personal data that Google collects for military purposes.

A Guardian article published in May by Peter Asaro and Lucy Suchman of the International Committee for Robot Arms Control, a co-founder of the Campaign, urged the company to consider key questions such as: “Should it use its state of the art artificial intelligence technologies, its best engineers, its cloud computing services, and the vast personal data that it collects to contribute to programs that advance the development of autonomous weapons? Should it proceed despite moral and ethical opposition by several thousand of its own employees?”

Days before announcing its ethical principles in June 2018, Google executives said the company would not renew its participation in Project Maven. In October, the company said it would not bid on a $10 billion Department of Defense cloud computing contract, reportedly out of concern that doing so would conflict with those ethical guidelines.

In August 2018, Google software engineer Amr Gaber, speaking in his personal capacity, described the tech worker actions at a campaign briefing for the Convention on Conventional Weapons (CCW) meeting on lethal autonomous weapons systems. He gave a powerful account of tech workers’ concerns about not only the military applications of the technology they work on but also its use in domestic policing and surveillance.

Laura Nolan, a tech worker from Dublin, spoke at the Campaign’s briefing for CCW delegates in November. Just before the meeting, she published a piece in the Financial Times describing the groundswell of dissent as thousands of employees at Google, Microsoft, Amazon and elsewhere push back against projects and personnel decisions they consider unethical.

The Campaign coordinator also wrote to Amazon’s Jeff Bezos in early 2018 after he described the possible development of fully autonomous weapons as “genuinely scary” and suggested a new treaty to help regulate them. The Campaign welcomed the remarks and encouraged Amazon to pledge not to contribute to the development of fully autonomous weapons and to publicly endorse the call for a new ban treaty. No response was received.

The Arms Control Association plans to mark the award at its Annual Meeting in Washington DC on 15 April 2019.

See also “Google, other companies must endorse ban” post, May 2018